Baltimore Orioles Vs San Francisco Giants Match Player Stats – A Detailed Overview!

July 3, 2025

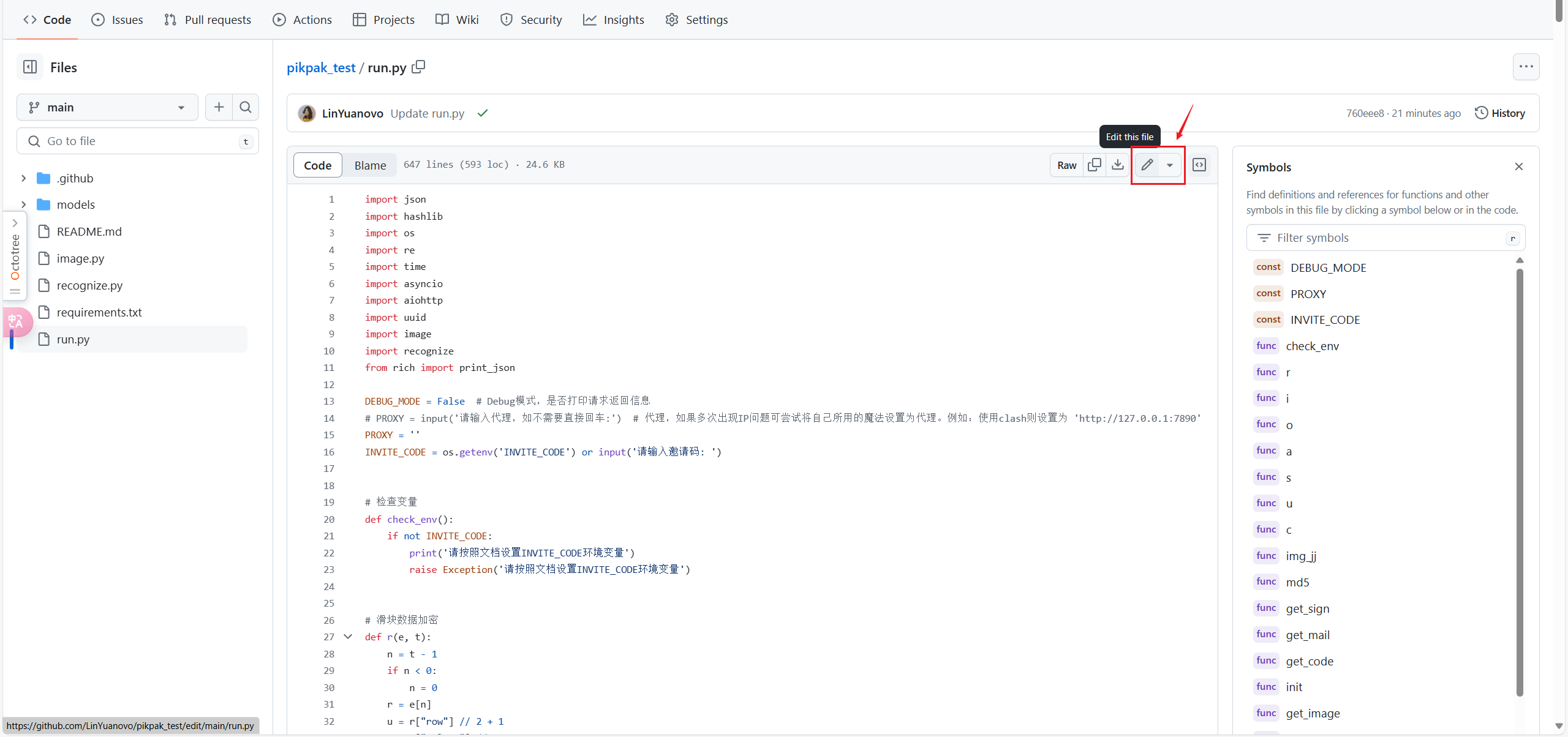

Pikpak_Auto_Invite – Unlock Seamless Sharing and Growth on PikPak!

As artificial intelligence (AI) evolves from theoretical innovation to real-world necessity, one concern persists across industries and communities: trust. How do we trust machines that make decisions for us? Enter Explainable AI (XAI), an approach that aims to bring clarity, transparency, and accountability to automated systems. One emerging name in this space is XAI770K.

Though not widely documented yet, XAI770K is drawing attention as a design philosophy, framework, or prototype model that emphasizes interpretable, scalable AI across various sectors. This article explores what XAI770K could represent, how it aligns with ethical AI goals, and why it may be the future of intelligent decision-making.

What Is XAI770K?

XAI770K appears to be a term derived from the fusion of two key elements:

- XAI: Short for Explainable Artificial Intelligence, it refers to AI models that are not only functional but understandable to humans.

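- 770K: This suffix may reference a parameter count, dataset size, or model identifier. If it denotes a parameter count, 770,000 parameters would be small by modern deep learning standards, where large models run to billions of parameters.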

In context, XAI770K is likely a comprehensive model or toolset built to deliver AI outcomes that humans can understand, evaluate, and refine. It bridges the gap between raw machine learning outputs and clear, actionable human insight.

Why XAI Matters — and Where XAI770K Fits In

Traditional AI models, especially deep learning networks, are often considered “black boxes.” They can process vast amounts of data and return accurate predictions — but they don’t explain why they made those decisions. This lack of transparency is problematic in critical fields like:

- Healthcare (e.g., diagnosing diseases)

- Finance (e.g., approving loans)

- Legal and law enforcement systems (e.g., predictive policing)

- Defense and security

- Autonomous transportation

XAI770K likely represents a model or system that not only functions at high performance but provides clarity behind every recommendation, alert, or prediction — a feature that’s becoming increasingly crucial for regulatory, ethical, and user experience reasons.

Key Features and Architecture of XAI770K

While concrete technical documentation may still be emerging, based on naming conventions and similar AI systems, XAI770K likely encompasses several key features:

1. Multi-Modal Input Processing

It may support diverse data inputs — text, image, video, audio — to function across complex environments like healthcare imaging, voice-based customer support, or multi-sensor robotics.

2. Layered Interpretability Mechanisms

Unlike opaque models, XAI770K would presumably integrate layers of reasoning, breaking complex decisions into understandable steps, akin to a decision tree embedded in a neural network.
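To picture what "breaking a decision into understandable steps" might look like, here is a minimal sketch of a hand-written rule tree that records every test it applies. It is a standalone rule tree, not a neural network, and everything in it is an assumption made for illustration: the function name `classify_with_trace`, the feature names, and the thresholds are hypothetical and do not come from any XAI770K documentation.

```python
from typing import Dict, List, Tuple


def classify_with_trace(features: Dict[str, float]) -> Tuple[str, List[str]]:
    """Walk a tiny hand-written decision tree, recording each test applied."""
    trace: List[str] = []
    temp = features["temperature_c"]
    rate = features["heart_rate_bpm"]

    # Step 1: hypothetical threshold on temperature (illustrative only).
    if temp < 38.0:
        trace.append(f"temperature_c={temp} < 38.0: no fever branch")
        return "no_action", trace
    trace.append(f"temperature_c={temp} >= 38.0: fever branch")

    # Step 2: hypothetical threshold on heart rate (illustrative only).
    if rate > 100:
        trace.append(f"heart_rate_bpm={rate} > 100: elevated heart rate")
        return "flag_for_review", trace
    trace.append(f"heart_rate_bpm={rate} <= 100: normal heart rate")
    return "monitor", trace
```

Calling `classify_with_trace({"temperature_c": 38.5, "heart_rate_bpm": 110})` returns the label together with the ordered list of steps that led to it, so a reviewer can read the reasoning rather than only the outcome.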

3. Real-Time Audit Logs

For each prediction, XAI770K could generate a time-stamped log that records contributing factors and confidence levels, enabling regulatory compliance and auditability.
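A time-stamped record of this kind could be as simple as one JSON line per prediction. The sketch below is an assumption about what such a log entry might contain, not a documented XAI770K format: the `AuditRecord` class, its field names, and the helper functions are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Dict


@dataclass
class AuditRecord:
    """One log entry per prediction (hypothetical schema)."""
    timestamp: str                          # ISO 8601, UTC
    prediction: str                         # the model's output label
    confidence: float                       # value in [0, 1]
    contributing_factors: Dict[str, float]  # factor name -> signed weight


def make_audit_record(prediction: str, confidence: float,
                      factors: Dict[str, float]) -> AuditRecord:
    """Build a record stamped with the current UTC time."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        prediction=prediction,
        confidence=confidence,
        contributing_factors=dict(factors),
    )


def to_json_line(record: AuditRecord) -> str:
    """Serialize the record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Writing one self-describing line per prediction keeps the log easy to append to and easy to search later, which is the property an auditor would most likely care about.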

4. Scalable Deployment

XAI770K is potentially deployable at different scales — from cloud-based enterprise systems to edge devices and embedded AI chips.

5. Secure and Ethical Design

A system like XAI770K would be expected to be built with privacy, security, and bias mitigation in mind, aiming for fairness in decision-making and safeguarding sensitive user data.

Potential Use Cases for XAI770K

The power of explainable and scalable AI can be applied across countless industries. Here’s where XAI770K could deliver immediate value:

🏥 1. Healthcare and Medical Diagnostics

- AI that suggests a diagnosis and shows the imaging patterns or lab markers behind its conclusion.

- Helps doctors understand why the model flagged a potential issue and increases trust in AI-assisted care.

💳 2. Finance and Risk Assessment

- Explains why a loan was rejected or approved by listing income patterns, risk scores, and spending habits — all in a legally compliant format.
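As a rough illustration of how a loan decision could be explained factor by factor, the sketch below uses a toy logistic scoring model and reports each input's contribution to the score. The weights, bias, feature names, and the `explain_loan_decision` function are invented for this example; they are not a real credit model, and the output makes no claim to legal compliance.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical weights for a toy logistic scoring model (illustrative only).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -0.5


def explain_loan_decision(
    applicant: Dict[str, float], threshold: float = 0.5
) -> Tuple[str, float, List[Tuple[str, float]]]:
    """Return the decision, approval probability, and ranked contributions."""
    # Each feature's contribution to the logit is weight * value.
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    decision = "approved" if probability >= threshold else "rejected"
    # Rank factors by absolute influence, largest first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return decision, probability, ranked


decision, probability, ranked = explain_loan_decision(
    {"income_k": 30, "debt_ratio": 0.6, "late_payments": 3}
)
for name, value in ranked:
    print(f"{name}: {value:+.2f}")
print(decision, round(probability, 3))
```

Because the model is linear in its inputs, each contribution can be read directly as "how much this factor pushed the score up or down". More complex models need dedicated attribution methods to produce a comparable breakdown.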

🚗 3. Autonomous Driving and Transportation

- When self-driving cars make split-second decisions, XAI770K could provide traceable logs of sensory inputs and risk thresholds.

🧠 4. Mental Health and Emotional AI

- Sentiment analysis tools powered by XAI770K could explain emotional assessments and suggest mental wellness actions transparently.

🛡️ 5. Cybersecurity and Threat Detection

- Models using XAI770K could flag suspicious activity and explain how it deviated from normal behavior, enabling fast and accurate response.
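One simple way to "explain how activity deviated from normal behavior" is to compare each observed metric against its own history and report the ones that fall far outside it. The sketch below uses z-scores for this. The metric names, the threshold of 3 standard deviations, and the `explain_deviation` function are assumptions chosen for illustration, not part of any documented XAI770K design.

```python
from statistics import mean, stdev
from typing import Dict, List


def explain_deviation(
    baseline: Dict[str, List[float]],
    observed: Dict[str, float],
    z_threshold: float = 3.0,
) -> List[str]:
    """List the metrics whose observed value is far from the baseline.

    Each baseline history needs at least two values for stdev to work.
    """
    findings: List[str] = []
    for metric, history in baseline.items():
        mu = mean(history)
        sigma = stdev(history)  # sample standard deviation
        if sigma == 0:
            continue  # a constant history gives no scale to compare against
        z = (observed[metric] - mu) / sigma
        if abs(z) >= z_threshold:
            findings.append(
                f"{metric}: observed {observed[metric]}, "
                f"baseline mean {mu:.1f}, z-score {z:.1f}"
            )
    return findings
```

Each finding names the metric, the observed value, and how far it sits from the baseline, which gives an analyst something concrete to check instead of a bare "suspicious" flag.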

How XAI770K Enhances AI Ecosystems

Here are some standout advantages that XAI770K-like systems bring to modern machine learning environments:

- ✅ Transparency: Removes mystery from AI decisions.

- ✅ Human-in-the-loop Compatibility: Lets users intervene or override automated decisions.

- ✅ Bias Detection and Correction: Makes it easier to trace and correct biased outcomes.

- ✅ Improved User Trust: Users adopt AI faster when they understand how it works.

- ✅ Compliance Ready: Supports alignment with regulations such as the EU's GDPR and AI Act.
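The bias detection point in the list above can be made concrete with a basic fairness check. The sketch below computes approval rates per group and the gap between the highest and lowest rate, a measure often called the demographic parity difference. It is one metric among many and says nothing about why a gap exists; the function names and group labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the fraction of approved decisions for each group."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions: Iterable[Tuple[str, bool]]) -> float:
    """Return the difference between the highest and lowest approval rate."""
    rates = approval_rates(decisions)
    if not rates:
        raise ValueError("no decisions provided")
    return max(rates.values()) - min(rates.values())
```

A gap near zero means the groups are approved at similar rates. A large gap is a prompt for investigation, not proof of unfair treatment on its own.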

The Rise of Explainable AI: A Global Priority

Governments and tech leaders are actively pushing for regulations and frameworks to ensure ethical AI. In the EU, for example, the GDPR restricts solely automated decisions that have legal or similarly significant effects on individuals, and the AI Act sets transparency requirements for high-risk AI systems. XAI770K could be the solution architecture that helps companies meet these demands while enhancing product value.

Moreover, as Generative AI tools like ChatGPT, Midjourney, and Claude dominate headlines, the need for guardrails, interpretability, and traceability grows — especially in sectors involving sensitive or life-altering decisions.

Challenges and Considerations

Like any advanced system, XAI770K faces some challenges:

- ⚠️ Complexity vs. Simplicity: Too much explanatory detail might confuse users instead of clarifying.

- ⚠️ Processing Power: High-parameter models need robust compute resources.

- ⚠️ Training Time: Ensuring accuracy and explainability requires longer, more nuanced model training.

- ⚠️ Interdisciplinary Skills: Developers must understand both machine learning and human-centered design.

Yet, with growing open-source tools and cloud computing, many of these hurdles are becoming more manageable.

Future Outlook of XAI770K

Demand for transparent, ethical, and collaborative AI is growing, and XAI770K, if it matures, could be part of that shift. Here’s what we may see in the near future:

- 🔮 Visual Dashboards that make AI reasoning accessible to non-technical users

- 🔮 API integrations that allow legacy systems to plug into explainable modules

- 🔮 Synthetic Explainability Models: AI that simulates what a human expert might say

- 🔮 Integration with Blockchain for secure audit trails of AI decisions

- 🔮 AI Regulation Compliance Kits powered by XAI770K templates
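The secure audit trail idea in the list above does not necessarily require a full blockchain. A plain hash chain already makes a log tamper-evident, because each entry's hash depends on the entry before it. The sketch below shows that simpler technique using SHA-256 from Python's standard library. It has no consensus, distribution, or signing, and all function and field names are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, payload: Dict) -> str:
    """Hash the previous hash together with a canonical JSON payload."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + body).hexdigest()


def append_entry(chain: List[Dict], payload: Dict) -> None:
    """Append a decision record linked to the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, payload),
    })


def verify_chain(chain: List[Dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True
```

If someone later edits an earlier decision record, `verify_chain` returns `False`, since the stored hash no longer matches the recomputed one. Publishing the latest hash to an external system is one way to make such tampering detectable by third parties.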

FAQs

1. What is XAI770K and how does it relate to explainable AI?

XAI770K is a term associated with explainable artificial intelligence (XAI) systems that prioritize transparency and interpretability. It likely refers to a scalable model or framework designed to help humans understand AI decisions, which would make it a fit for critical industries like healthcare, finance, and cybersecurity.

2. How does XAI770K improve AI transparency and user trust?

XAI770K is intended to enhance transparency by breaking down complex machine learning outputs into understandable, human-readable formats. By providing clear reasoning behind predictions and decisions, it would let users validate the AI system’s actions and trust them with more confidence.

3. In which industries can XAI770K be most effectively applied?

XAI770K can be applied in a variety of sectors, including healthcare (diagnostic support), finance (loan approvals and fraud detection), autonomous driving (decision logs), and cybersecurity (threat analysis). Its explainability makes it ideal for regulated environments requiring detailed audit trails.

4. What makes XAI770K different from traditional black-box AI models?

Unlike traditional black-box models that offer high performance without explanation, XAI770K is described as integrating explainability directly into the model’s architecture. The aim is for every AI output to be backed by traceable logic, making the system more accountable and easier to align with ethical AI standards.

5. Is XAI770K suitable for real-time decision-making applications?

Possibly. XAI770K is described as supporting real-time processing, which would make it suitable for applications like autonomous vehicles, emergency response systems, and financial trading, where instant decisions must also be interpretable and justifiable. Concrete technical documentation is still emerging, so this remains to be confirmed.

Conclusion

XAI770K represents a bold leap forward in making artificial intelligence not only intelligent but also accountable, transparent, and ethical. In an era where black-box algorithms can affect everything from bank loans to medical treatments, tools like XAI770K are essential to rebuilding trust between humans and machines. Whether you’re developing the next-gen app, managing data compliance, or innovating in healthcare, embracing explainable AI frameworks like XAI770K will be a defining move toward a smarter, fairer digital future.